Recently we’ve been experimenting with using TeamCity as a Debian repository, and we’d like to share some tips and tricks we’ve come up with in the process.

We used the TeamCity tcDebRepository plugin to build *.deb packages and serve package updates to Debian/Ubuntu servers. The plugin, the special prize winner of the 2016 TeamCity plugin contest, works like a charm, but we’ve encountered a few difficulties with the software used to build and package .deb files, hence this blog post.

It’s important to note that tcDebRepository is not capable of serving packages to recent Ubuntu versions due to the lack of package signing. This is planned to be resolved in version 1.1 of the plugin (the plugin compatibility details).

We do not intend to cover the basics of TeamCity and Debian GNU/Linux infrastructure. To get acquainted with TeamCity, this video might be helpful. Building Debian packages is concisely described in the Debian New Maintainers’ Guide.

Everything below applies to any other Debian-based GNU/Linux distribution, e.g. Astra Linux.

Prerequisites and Project Setup

We arbitrarily chose 4 packages to experiment with:

Our setup uses:

- The free Professional version of TeamCity 10 with 3 agents running Debian 8.0 (Jessie).

- The tcDebRepository plugin installed as usual.

- Besides the TeamCity agent software, each of the agents required installing:

  - the build-essential package as a common prerequisite

  - the dependencies (from the build-depends and build-depends-indep categories) required for individual packages.

![01-build-depends]()

![02-build-depends-indep]()
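The build dependencies of a package are declared in its debian/control file, so the list to install on an agent can be read from there. The following is a minimal sketch, not the procedure used in this post: the function name `list_build_deps` is made up for illustration, only the first alternative of an `a | b` dependency is kept, and version constraints are discarded.

```shell
#!/bin/bash
# list_build_deps CONTROL_FILE
# Prints the package names found in the Build-Depends and
# Build-Depends-Indep fields of a debian/control file, one per line.
# Hypothetical helper: version constraints, architecture restrictions and
# build profiles are stripped; only the first alternative of "a | b" is kept.
list_build_deps() {
  awk '
    /^Build-Depends(-Indep)?:/ { infield = 1; sub(/^[^:]*:/, ""); print; next }
    infield && /^[ \t]/        { print; next }   # continuation line
                               { infield = 0 }   # any other field ends the list
  ' "$1" |
    tr ',' '\n' |
    sed -e 's/[(\[<].*$//' -e 's/|.*$//' -e 's/^[ \t]*//' -e 's/[ \t]*$//' |
    grep -v '^$' |
    sort -u
}

# Possible usage on an agent (requires root privileges):
#   list_build_deps bash/debian/control | xargs sudo apt-get install -y
```

If the agent has deb-src entries configured, `apt-get build-dep pkgname` is another way to install the same set of packages.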

Next we created a project in TeamCity with four build configurations (one per package):

![teamcity-build-configs]()

Configuring VCS Roots

When configuring VCS Roots in TeamCity, the type of each Debian GNU/Linux package had to be taken into account.

Debian packages can be either native or non-native (details).

The code of native packages (e.g. debhelper, dpkg), developed within the Debian project, contains all the meta-information required for building (the debian/ directory in the source code tree).

The source code of non-native packages (e.g. bash) is not related to Debian, and an additional tree of their source code and meta-information with patches (contained in the debian/ directory) has to be maintained.

Therefore, for native packages we configured one VCS Root (pointing to Debian); whereas non-native packages needed two roots.
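For source packages that use the 3.0 source formats, the kind of package is recorded in debian/source/format, which can help when deciding how many VCS Roots a configuration needs. The sketch below assumes that file is present; the function name `source_kind` is hypothetical, and packages in the older 1.0 format are reported as "unknown".

```shell
#!/bin/bash
# source_kind SRCDIR
# Prints "native", "non-native" or "unknown" based on the contents of
# SRCDIR/debian/source/format ("3.0 (native)" or "3.0 (quilt)").
# Hypothetical helper; packages without that file are reported as "unknown".
source_kind() {
  local format_file="$1/debian/source/format"
  if [ ! -f "$format_file" ]; then
    echo "unknown"
  elif grep -q '(native)' "$format_file"; then
    echo "native"
  elif grep -q '(quilt)' "$format_file"; then
    echo "non-native"
  else
    echo "unknown"
  fi
}

# Possible usage:
#   source_kind dpkg
```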

Next we configured checkout rules. The difference from the usual workflow is that in our case the artifacts (Debian packages) are built outside the source tree, one directory higher. This means that the checkout rules in TeamCity should be configured so that the source code of a package (dpkg, for example) is checked out not into the current working directory, but into a subdirectory with the same name as the package, i.e. pkgname/. This is done by adding the following line to the checkout rules:

+:.=>pkgname

Finally, a VCS trigger was added to every configuration.

Artifact paths

In terms of TeamCity, the binary packages we are about to build are artifacts, specified using artifact paths in the General Settings of every build configuration:

![03-teamcity-debian-general-artifact-paths]()

For native packages, the pkgname.orig.tar.{gz,bz2,xz,lzma} and pkgname.debian.tar.{gz,bz2,xz,lzma} will not be created.

Build Step 1: Integration with Bazaar

Building a package with Bazaar-hosted source code (bash in our case) requires integration with the Bazaar Version Control System, which TeamCity does not support out of the box. There is a third-party TeamCity plugin that supports Bazaar, but it has at least two problems:

- agent-side checkout is not supported

- the plugin is based on bzr xmlls and bzr xmllog (the bzr commands invoked by the plugin), but these commands are provided by external modules not necessarily available in the default Bazaar installation

The TeamCity server we used is running Windows, so we decided against installing Bazaar on the server side. Instead, to build the bash package, we added a build step using the Command Line Runner and a shell script for Bazaar integration:

#!/bin/bash

export LANG=C

export LC_ALL=C

set -e

rm -rf bash/debian

bzr branch http://bazaar.launchpad.net/~doko/+junk/pkg-bash-debian bash/debian

major_minor=$(head -n1 bash/debian/changelog | awk '{print $2}' | tr -d '[()]' | cut -d- -f1)

echo "Package version from debian/changelog: ${major_minor}"

tar_archive=bash_${major_minor}.orig.tar

rm -f ${tar_archive} ${tar_archive}.bz2

# +:.=>bash checkout rule should be set for the main VCS root in TeamCity

tar cf ${tar_archive} bash

tar --delete -f ${tar_archive} bash/debian

# Required by dpkg-buildpackage

bzip2 -9 ${tar_archive}

This approach does not let us see changes in one of the two source trees or trigger builds automatically on those changes, but it is acceptable as a first try.
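The version extraction in the script is the least obvious part, so here it is in isolation. This sketch wraps the same pipeline in a function (the name `upstream_version` and the sample changelog line are made up for illustration) and runs it against a changelog file instead of the real checkout.

```shell
#!/bin/bash
# upstream_version CHANGELOG
# Applies the same pipeline as the build step above: take the first line of
# the changelog, pick the second field "(version)", drop the brackets and
# cut everything from the first hyphen (the Debian revision).
# Hypothetical wrapper for illustration only.
upstream_version() {
  head -n1 "$1" | awk '{print $2}' | tr -d '[()]' | cut -d- -f1
}

# Possible usage:
#   upstream_version bash/debian/changelog
```

For a first changelog line such as `bash (4.4-2) unstable; urgency=medium`, the function prints `4.4`. Note that the pipeline cuts at the first hyphen and keeps any epoch prefix, so it only suits versions shaped like the one in this package; `dpkg-parsechangelog -S Version` from dpkg-dev may be a more general way to read the full version string.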

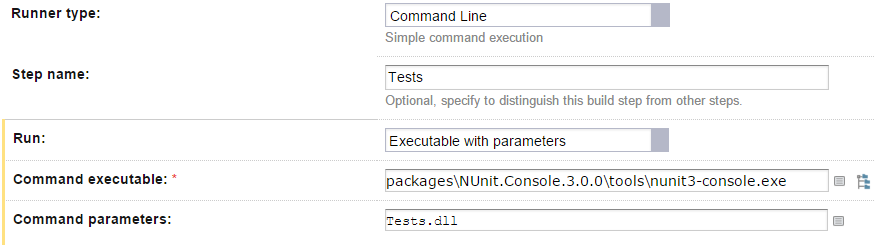

Build Step 2: Initial Configuration

We used the Command Line Runner to invoke dpkg-buildpackage. The -uc and -us options in the image below leave the .changes file and the source package unsigned, since digital signatures are currently out of scope for our packages. If you wish to sign the packages, you’ll have to load the corresponding pair of GnuPG keys on each of the agents.

Note that the “Working directory” is not set to the current working directory, but to the subdirectory with the same name as the package (bash in this case) where the source code tree will be checked out and dpkg-buildpackage will be executed. If the VCS Root is configured, the “Working directory” field can be filled out using the TeamCity directory picker, without entering the directory name manually:

![07-teamcity-debian-dpkg-buildpackage-step]()
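The choice between unsigned and signed builds can be kept in one place in a custom script. The following is a sketch under assumptions: `SIGN_KEY` is a hypothetical environment variable (for example, set from a TeamCity parameter) holding a GnuPG key ID already available on the agent, and `buildpackage_args` is a made-up helper name. `-k<key-id>` is the dpkg-buildpackage option for selecting the signing key.

```shell
#!/bin/bash
# buildpackage_args
# Prints the signing-related options for dpkg-buildpackage:
#   - "-k<key-id>" when the hypothetical SIGN_KEY variable is set
#   - "-uc -us" (unsigned .changes and source) otherwise, as in this post
buildpackage_args() {
  if [ -n "${SIGN_KEY:-}" ]; then
    echo "-k${SIGN_KEY}"
  else
    echo "-uc -us"
  fi
}

# Possible usage in a Command Line Runner step whose working directory
# is the package subdirectory (the unquoted expansion is intentional):
#   dpkg-buildpackage $(buildpackage_args)
```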

After the first build was run, we encountered some problems.

Build problems resolution

Code quality

For some packages (bash in our case), the two code trees were not synchronized, i.e. they corresponded to slightly different minor versions, which forced us to use tags rather than branches from the main code tree. Luckily, TeamCity allows building on a tag, and the “Enable to use tags in the branch specification” option can be set in such cases:

![teamcity-vcs-tags-in-branch-specs-small]()

Dependencies

Here is another problem we faced. When running the first build, dpkg-buildpackage exited with code 3 even though all the required dependencies were installed (see the prerequisites section above).

There are a few related nuances:

- The most common case for continuous integration is building software from Debian Unstable or Debian Experimental. To meet all the dependencies required for the build, the TeamCity agents themselves need to run Debian Unstable or Debian Experimental (otherwise dpkg-buildpackage will report that your stable dependency versions are too old). To resolve the error, we added the -d parameter, which skips the build dependency check:

dpkg-buildpackage -uc -us -d

- A special case of old dependencies is a configure script created by a version of GNU Autotools newer than the one currently installed on your system. dpkg-buildpackage is unable to detect this, so if the build log shows messages about missing m4 macros, you need to regenerate the configure script using the version of GNU Autotools currently installed on the agent. This was done by adding the following command as a build step executed before dpkg-buildpackage:

autoreconf -i
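If the same build step is reused across several build configurations, the autoreconf call can be guarded so that it only runs for source trees that actually use Autoconf. This is a sketch, not part of the original setup; the helper name `has_autoconf_input` is hypothetical, and it only looks for the conventional input file names in the top-level source directory.

```shell
#!/bin/bash
# has_autoconf_input SRCDIR
# Succeeds when SRCDIR contains an Autoconf input file (configure.ac, or
# the older configure.in name). Hypothetical helper for illustration.
has_autoconf_input() {
  [ -f "$1/configure.ac" ] || [ -f "$1/configure.in" ]
}

# Possible usage before dpkg-buildpackage:
#   if has_autoconf_input bash; then
#     (cd bash && autoreconf -i)
#   fi
```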

Broken unit tests

When working with Debian Unstable or Debian Experimental, unit tests may fail (as happened in our case). We chose to ignore the failing tests and still build the package by running dpkg-buildpackage in a modified environment:

DEB_BUILD_OPTIONS=nocheck dpkg-buildpackage -uc -us

Other build profiles are described here.
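DEB_BUILD_OPTIONS is a space-separated list, so setting it to `nocheck` outright would discard any options already defined on the agent (for example `parallel=4`). The sketch below appends an option only when it is missing; the function name `add_build_option` is made up for illustration.

```shell
#!/bin/bash
# add_build_option OPTION
# Prints the current DEB_BUILD_OPTIONS value with OPTION appended,
# unless OPTION is already present. Hypothetical helper.
add_build_option() {
  local option=$1 current=${DEB_BUILD_OPTIONS:-}
  case " $current " in
    *" $option "*) echo "$current" ;;                   # already set
    *)             echo "${current:+$current }$option" ;;
  esac
}

# Possible usage:
#   DEB_BUILD_OPTIONS=$(add_build_option nocheck) dpkg-buildpackage -uc -us
```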

Final Stage

After tuning all of the build configurations, our builds finally yielded the expected artifacts (the dpkg package is used as an example):

![11-teamcity-debian-artifacts]()

If you do not want each incremental package version to increase the number of artifacts of a single build (giving you dpkg_1.18.16_i386.deb, dpkg_1.18.17_i386.deb, etc.), the working directory should be selectively cleaned up before starting a new build. This was done by adding the TeamCity Swabra build feature to our build configurations.
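For setups where Swabra is not an option, a similar selective cleanup could be done in a build step that runs first. The sketch below is an alternative to what we did, not a description of it: `clean_old_packages` is a hypothetical helper that removes the files left by previous builds from the checkout directory without touching the source subdirectory. It only matches files named after the source package, so binary packages with other names built from the same source would need extra patterns.

```shell
#!/bin/bash
# clean_old_packages DIR PKG
# Deletes PKG_*.deb, PKG_*.dsc, PKG_*.changes and PKG_*.tar.* files located
# directly in DIR (no recursion) and prints each removed path.
# Hypothetical helper; relies on find's -maxdepth and -delete.
clean_old_packages() {
  local dir=$1 pkg=$2
  find "$dir" -maxdepth 1 -type f \
    \( -name "${pkg}_*.deb" -o -name "${pkg}_*.dsc" \
       -o -name "${pkg}_*.changes" -o -name "${pkg}_*.tar.*" \) \
    -print -delete
}

# Possible usage as the first build step (working directory: checkout root):
#   clean_old_packages . dpkg
```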

Tuning up Debian repository

The tcDebRepository plugin adds the “Debian Repositories” tab in the project settings screen, which allows you to create a Debian repository. Once the repository is created, artifact filters need to be configured: at the moment each filter is added manually. Filters can be edited, deleted or copied.

![teamcity-adding-an-artifact-filter]()

Existing artifacts are not indexed; therefore, after configuring the Debian repository, at least one build must be run in every build configuration. Then we can view the available indexed packages:

![16-teamcity-debian-debrepo-page-2]()

Once the repository is added to /etc/apt/sources.list, all these packages are visible on the client side. Note that the packages are not digitally signed, which prevents packages from being available on recent versions of Ubuntu. Package signing is planned for tcDebRepository version 1.1.
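A repository entry in sources.list follows the usual `deb URI distribution component` format. The sketch below only assembles such a line; `apt_source_line` is a hypothetical helper, and the URL, distribution and component in the usage comment are placeholders to be replaced with the values of your own repository.

```shell
#!/bin/bash
# apt_source_line URL DISTRIBUTION COMPONENT
# Prints a one-line sources.list entry. Hypothetical helper; all three
# arguments are placeholders supplied by the caller.
apt_source_line() {
  if [ "$#" -ne 3 ]; then
    echo "usage: apt_source_line URL DISTRIBUTION COMPONENT" >&2
    return 1
  fi
  echo "deb $1 $2 $3"
}

# Possible usage on a client (requires root privileges):
#   apt_source_line "http://teamcity.example.com/<repository path>" jessie main \
#     | sudo tee /etc/apt/sources.list.d/teamcity.list
#   sudo apt-get update
```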

![17-teamcity-debian-aptitude-1]()

(!) If you are compiling for several architectures (i386, x32, amd64, arm*), it is worth having several build configurations corresponding to the same package with different agent requirements, or, in addition to the VCS Trigger, using the Schedule Trigger with the “Trigger build on all enabled and compatible agents” option enabled.

![20-teamcity-debian-change-log]()

Happy building!

* We’d like to thank netwolfuk, the author of the tcDebRepository plugin, for contributing to this publication.

This article, based on the original post in Russian, was translated by Julia Alexandrova.
